Many-Channel AXI DMA


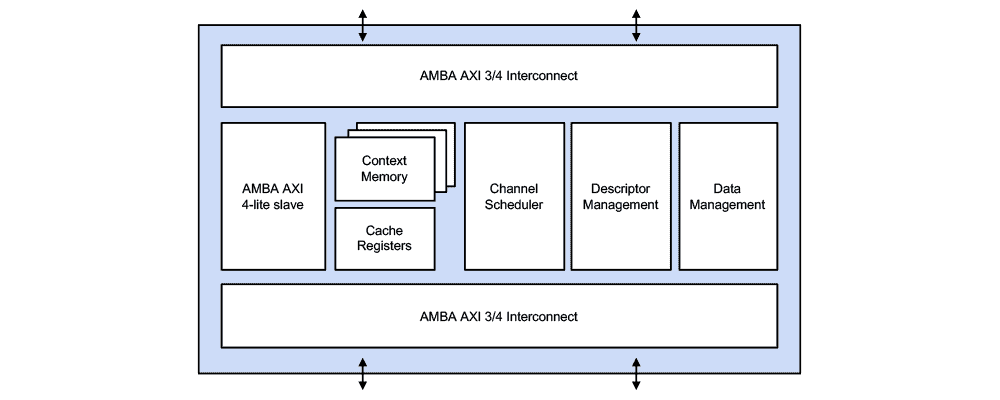

The Many-Channel AXI DMA (formerly vDMA-AXI) IP Core implements a highly efficient, configurable DMA engine specifically engineered for Artificial Intelligence/Machine Learning (AI/ML) optimized SoCs and FPGAs that power tomorrow’s virtualized data centers.

How the Many-Channel AXI DMA Core Works

The core is intended to serve as a centralized DMA engine that allows concurrent data movement in any direction, and it is particularly suited to many-core SoCs such as AI/ML processors. The Many-Channel AXI DMA Core is based on a novel architecture that allows hundreds of independent, concurrent DMA channels to be distributed among a number of Virtual Machines (VMs) or host domains without sacrificing performance or resource utilization. The core is optimized to deliver the highest possible throughput for small data packet transfers, a common weakness of traditional DMA engines.
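To make the idea of distributing channels among VMs or host domains more concrete, the following C sketch models a table that assigns DMA channels to domains and checks ownership on every request. It is a hypothetical software model, not the core's register interface or the C API delivered with it: `channel_map_t`, `channel_map_assign`, and `channel_access_allowed` are illustrative names, and the limits of 256 channels and 6 domains are taken from the General/Performance Features list.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical model; limits taken from the published feature list. */
#define MAX_CHANNELS 256
#define MAX_DOMAINS 6
#define NO_DOMAIN 0xFF /* marker for an unassigned channel */

static_assert(MAX_DOMAINS < NO_DOMAIN, "domain IDs must not collide with NO_DOMAIN");

typedef struct {
    uint8_t owner[MAX_CHANNELS]; /* owning domain of each channel, or NO_DOMAIN */
} channel_map_t;

/* Start with every channel unassigned. */
static void channel_map_init(channel_map_t *m) {
    for (int ch = 0; ch < MAX_CHANNELS; ch++)
        m->owner[ch] = NO_DOMAIN;
}

/* Assign `count` contiguous channels starting at `first` to `domain`.
   Returns false if the arguments are out of range or if any channel in the
   range already belongs to a domain, so channels are never shared. */
static bool channel_map_assign(channel_map_t *m, int first, int count, uint8_t domain) {
    if (domain >= MAX_DOMAINS || first < 0 || first >= MAX_CHANNELS ||
        count <= 0 || count > MAX_CHANNELS - first)
        return false;
    for (int ch = first; ch < first + count; ch++)
        if (m->owner[ch] != NO_DOMAIN)
            return false;
    for (int ch = first; ch < first + count; ch++)
        m->owner[ch] = domain;
    return true;
}

/* Isolation check: a request tagged with `domain` may only use channels it owns. */
static bool channel_access_allowed(const channel_map_t *m, int ch, uint8_t domain) {
    return ch >= 0 && ch < MAX_CHANNELS && m->owner[ch] == domain;
}
```

In this model an overlapping assignment is rejected outright, so a channel can never end up visible to two domains at once.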

The Many-Channel AXI DMA core can be attached externally to the PCIe 5.0 Controller to form a scalable, enterprise-class PCIe interface solution for compute, network, and storage SoCs.

Data Center Evolution: Accelerating Computing with PCI Express 5.0

The PCI Express® (PCIe) interface is the critical backbone that moves data at high bandwidth between compute nodes such as CPUs, GPUs, FPGAs, and workload-specific accelerators. The rise of cloud-based computing and hyperscale data centers, along with high-bandwidth applications such as artificial intelligence (AI) and machine learning (ML), requires the higher level of performance that PCI Express 5.0 provides.

Solution Offerings

General/Performance Features

- Up to 256 DMA channels

- Up to 6 Virtual Machines or domains

- Configurable AMBA AXI3/4 interfaces with 256-bit or 512-bit data path

- Up to 64 outstanding read and write requests

- Dynamically reconfigurable DMA channel source and destination

- Scatter-Gather DMA with dynamic DMA control per descriptor

- Circular queues for packet transmission and reception

- Fetching of up to 256 descriptors for optimized throughput

- Optional DMA channel descriptor reporting for simplified software management

- Centralized DMA channel context memory for a reduced footprint
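Scatter-gather transfers over circular queues, as listed above, can be pictured as a descriptor ring that software fills and the engine drains. The C sketch below assumes a hypothetical descriptor layout (`dma_desc_t` with source, destination, length, and control fields) and hypothetical helper names; the actual descriptor format and queue handling are defined in the core's user's manual. Head and tail are free-running 32-bit counters, so `tail - head` gives the number of pending descriptors even after wraparound, and each fetch is capped at 256 descriptors to mirror the fetch limit in the feature list.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical descriptor layout for one scatter-gather segment. */
typedef struct {
    uint64_t src_addr; /* source address of this segment */
    uint64_t dst_addr; /* destination address of this segment */
    uint32_t length;   /* bytes to move for this segment */
    uint32_t control;  /* per-descriptor control bits (hypothetical) */
} dma_desc_t;

#define RING_SIZE 256u /* ring capacity; a power of two so indices wrap with a mask */
#define FETCH_MAX 256u /* most descriptors fetched in one burst */

static_assert((RING_SIZE & (RING_SIZE - 1u)) == 0, "RING_SIZE must be a power of two");

typedef struct {
    dma_desc_t desc[RING_SIZE];
    uint32_t head; /* next descriptor the engine fetches (free-running) */
    uint32_t tail; /* next slot software fills (free-running) */
} dma_ring_t;

static void ring_init(dma_ring_t *r) { r->head = r->tail = 0; }

/* Number of posted but not yet fetched descriptors; correct across wraparound. */
static uint32_t ring_used(const dma_ring_t *r) { return r->tail - r->head; }

/* Software side: append one descriptor; returns false if the ring is full. */
static bool ring_post(dma_ring_t *r, dma_desc_t d) {
    if (ring_used(r) == RING_SIZE)
        return false;
    r->desc[r->tail & (RING_SIZE - 1u)] = d;
    r->tail++;
    return true;
}

/* Engine side: copy up to `max` (and at most FETCH_MAX) pending descriptors
   into `out` in posting order, free their slots, and return the count.
   `out` must hold at least min(max, FETCH_MAX) entries. */
static uint32_t ring_fetch(dma_ring_t *r, dma_desc_t *out, uint32_t max) {
    uint32_t n = ring_used(r);
    if (n > max)
        n = max;
    if (n > FETCH_MAX)
        n = FETCH_MAX;
    for (uint32_t i = 0; i < n; i++)
        out[i] = r->desc[(r->head + i) & (RING_SIZE - 1u)];
    r->head += n;
    return n;
}
```

Fetching descriptors in batches rather than one at a time is one plausible way to keep the data path busy with small packets; how the core actually schedules its fetches is not described here.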

QoS and Security Features

- Round robin fair bandwidth sharing between channels

- Configurable packet size for bandwidth sharing

- Non-blocking DMA channel operation

- Domain isolation, enforced through sideband signaling for identification and access control

Deliverables

- Synthesizable Verilog RTL (source code or encrypted source code)

- Software Design Kit

- Linux device driver (binary or source code)

- C API

- User’s manual
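The QoS features listed above (round-robin fair bandwidth sharing, a configurable packet size, and non-blocking channel operation) can be illustrated with a small software model of a round-robin arbiter. This is a hypothetical sketch, not the core's RTL arbitration logic: `rr_arbiter_t`, `rr_grant`, and the four-channel size are illustrative, `packet_size` is assumed to be the per-grant transfer quantum, and "non-blocking" is interpreted here as skipping idle channels instead of waiting on them.

```c
#include <assert.h>
#include <stdint.h>

#define NUM_CHANNELS 4 /* small for illustration; the core supports up to 256 */

static_assert(NUM_CHANNELS > 0, "need at least one channel");

typedef struct {
    uint32_t pending[NUM_CHANNELS]; /* bytes each channel still has to move */
    uint32_t packet_size;           /* per-grant quantum in bytes; must be nonzero */
    int last;                       /* index of the most recently granted channel */
} rr_arbiter_t;

/* Grant the next channel after `last` that has pending data, in round-robin
   order. The winner moves at most `packet_size` bytes, so one large transfer
   cannot starve the others, and idle channels are skipped rather than waited on.
   Returns the granted channel and stores the bytes moved in `*granted_bytes`,
   or returns -1 (with 0 bytes) when every channel is idle. */
static int rr_grant(rr_arbiter_t *a, uint32_t *granted_bytes) {
    for (int step = 1; step <= NUM_CHANNELS; step++) {
        int ch = (a->last + step) % NUM_CHANNELS;
        if (a->pending[ch] == 0)
            continue;
        uint32_t n = a->pending[ch] < a->packet_size ? a->pending[ch] : a->packet_size;
        a->pending[ch] -= n;
        a->last = ch;
        *granted_bytes = n;
        return ch;
    }
    *granted_bytes = 0;
    return -1;
}
```

Starting with `last = NUM_CHANNELS - 1` makes channel 0 the first candidate. A smaller `packet_size` interleaves channels more finely at the cost of more arbitration decisions, which is the trade-off a configurable packet size lets the integrator tune.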

Advanced Design Integration Services:

- Customization of IP to add customer-specific features

- Generation of custom reference designs

- Generation of custom verification environments

- Design/architecture review and consulting