Optimizing Capacity, Connectivity and Capability of the Cloud

Data Center Challenges

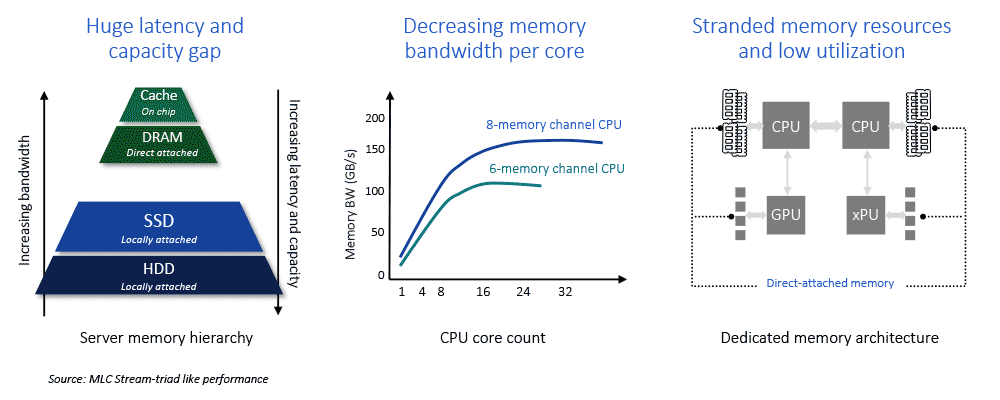

Data centers face three major memory challenges as roadblocks to greater performance and total cost of ownership (TCO).

The first is the limitation of the current server memory hierarchy. A latency gap of three orders of magnitude separates direct-attached DRAM from Solid-State Drive (SSD) storage. When a processor runs out of capacity in direct-attached memory, it must go to SSD, which leaves the processor waiting. That waiting, or latency, has a dramatic negative impact on computing performance.
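To make the cost of that gap concrete, here is a minimal sketch of the average access latency when some fraction of accesses spills from DRAM to SSD. The two latency constants are illustrative assumptions chosen only to be three orders of magnitude apart; they are not measurements of any particular system.

```python
# Illustrative latencies only (assumptions, not measurements).
DRAM_LATENCY_NS = 100        # assumed direct-attached DRAM access time
SSD_LATENCY_NS = 100_000     # assumed SSD access time, 1000x slower


def average_latency_ns(dram_hit_fraction: float) -> float:
    """Average latency when a fraction of accesses is served from DRAM
    and the remainder falls through to SSD."""
    if not 0.0 <= dram_hit_fraction <= 1.0:
        raise ValueError("dram_hit_fraction must be between 0 and 1")
    miss_fraction = 1.0 - dram_hit_fraction
    return dram_hit_fraction * DRAM_LATENCY_NS + miss_fraction * SSD_LATENCY_NS


if __name__ == "__main__":
    for hit in (1.0, 0.99, 0.95):
        avg = average_latency_ns(hit)
        print(f"{hit:.0%} served from DRAM: {avg:,.0f} ns average")
```

Under these assumed figures, sending just 1% of accesses to SSD raises the average latency roughly elevenfold compared with serving everything from DRAM.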

Second, core counts in multi-core processors are scaling far faster than the number of main memory channels. Beyond a certain core count, additional cores are starved for memory bandwidth, which diminishes the benefit of adding them.
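The bandwidth squeeze can be sketched with simple arithmetic. The example below assumes DDR5-4800 channels with a 64-bit data path (4800 MT/s × 8 bytes = 38.4 GB/s peak per channel); the core and channel counts are hypothetical configurations picked for illustration, not specific products.

```python
# Peak bandwidth of one assumed DDR5-4800 channel with a 64-bit data path.
TRANSFERS_PER_SECOND = 4800e6
BYTES_PER_TRANSFER = 8
CHANNEL_BANDWIDTH_GBPS = TRANSFERS_PER_SECOND * BYTES_PER_TRANSFER / 1e9  # 38.4


def bandwidth_per_core_gbps(cores: int, channels: int) -> float:
    """Peak memory bandwidth available to each core, in GB/s,
    if all channels are shared evenly across all cores."""
    if cores <= 0 or channels <= 0:
        raise ValueError("cores and channels must be positive")
    return channels * CHANNEL_BANDWIDTH_GBPS / cores


if __name__ == "__main__":
    # Hypothetical (cores, channels) pairs: cores grow 4x, channels only 1.5x.
    for cores, channels in ((32, 8), (64, 8), (128, 12)):
        per_core = bandwidth_per_core_gbps(cores, channels)
        print(f"{cores} cores, {channels} channels: {per_core:.1f} GB/s per core")
```

In this sketch the per-core share falls from 9.6 GB/s to 3.6 GB/s even though the channel count increases, which is the starvation effect described above.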

Finally, with the increasing move to accelerated computing, in which accelerators have their own direct-attached memory, there is a growing problem of underutilized, or stranded, memory resources.

Meeting the Challenges with CXL

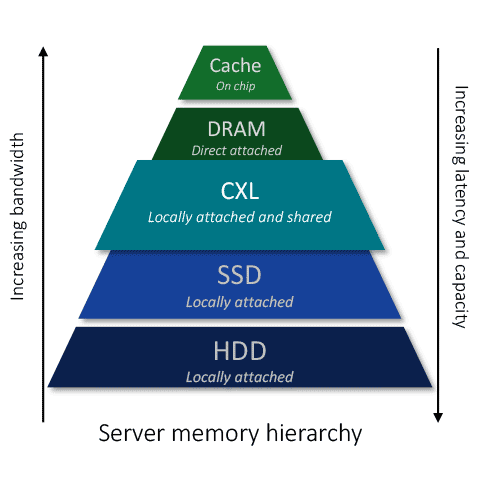

The solution to these data center memory challenges is a complementary, pin-efficient memory technology that can provide more bandwidth and capacity to processors in a flexible manner. Compute Express Link™ (CXL™) is the broadly supported industry standard developed to provide low-latency, cache-coherent links between processors, accelerators and memory devices.

CXL uses PCI Express®, which is ubiquitous in data centers, for its physical layer. With CXL, new memory tiers can be implemented that bridge the gap between direct-attached memory and SSD and unlock the power of multi-core processors.

In addition, CXL’s cache coherency allows memory resources to be shared between processors and accelerators. Sharing memory is the key to tackling the stranded memory resource problem.

CXL Memory Expansion and Pooling

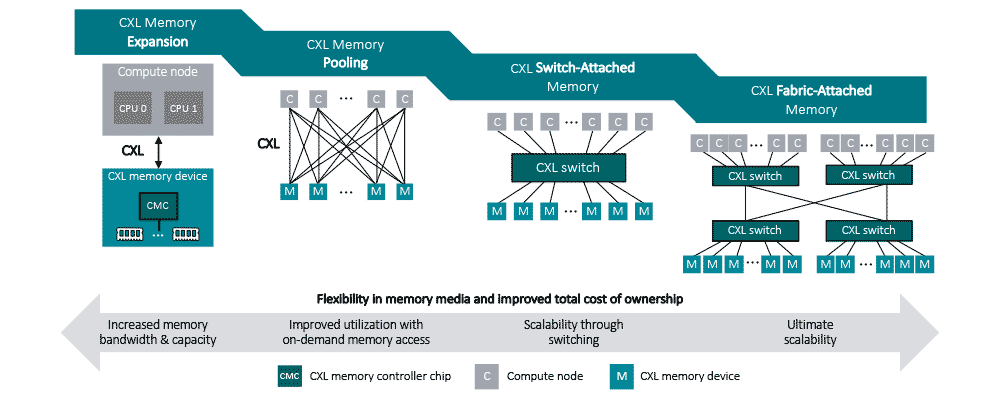

CXL enables a number of use models that deliver increasing levels of bandwidth and capacity in exchange for added latency. The first of these use models, or memory tiers, shown on the right in the figure, is memory expansion, which provides increased bandwidth and capacity at a latency approaching that of direct-attached memory.

CXL memory pooling allows multiple compute nodes (processors and accelerators) to access multiple CXL memory devices on demand, providing flexible and efficient access to greater memory bandwidth and capacity. The same concept can be scaled out through CXL switching and fabrics.
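The benefit of pooling over dedicated memory can be illustrated with a toy capacity model. This is a sketch under stated assumptions, not a model of any CXL device or of the Rambus products: the node count, capacities and demands are hypothetical, and the pool is treated as freely divisible among nodes with no latency or bandwidth cost.

```python
def unmet_demand_dedicated(capacity_gb, demand_gb):
    """Memory demand that cannot be served when each node may use
    only its own direct-attached memory."""
    return sum(max(d - c, 0) for c, d in zip(capacity_gb, demand_gb))


def stranded_dedicated(capacity_gb, demand_gb):
    """Memory left idle on nodes whose demand is below their capacity."""
    return sum(max(c - d, 0) for c, d in zip(capacity_gb, demand_gb))


def unmet_demand_pooled(local_gb, pool_gb, demand_gb):
    """Unmet demand when overflow beyond local memory is served on demand
    from a shared pool (assumed freely divisible among nodes)."""
    overflow = sum(max(d - c, 0) for c, d in zip(local_gb, demand_gb))
    return max(overflow - pool_gb, 0)


if __name__ == "__main__":
    demand = [200, 700, 300, 600]        # hypothetical per-node demand, GB

    dedicated = [512, 512, 512, 512]     # 2048 GB total, all direct-attached
    print("dedicated unmet:   ", unmet_demand_dedicated(dedicated, demand), "GB")
    print("dedicated stranded:", stranded_dedicated(dedicated, demand), "GB")

    local = [256, 256, 256, 256]         # same 2048 GB total, half moved to a pool
    print("pooled unmet:      ", unmet_demand_pooled(local, 1024, demand), "GB")
```

With the same 2048 GB in total, the dedicated layout in this example leaves 276 GB of demand unserved while 524 GB sits idle on other nodes; moving half of the capacity into a shared pool serves all of the demand.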

Rambus CXL Memory Initiative

Through the CXL Memory Initiative, Rambus is developing solutions to enable a new era of data center performance and efficiency. This initiative is the latest chapter in the 30-year history of Rambus leadership in state-of-the-art memory and chip-to-chip interconnect products.

Chip solutions needed for CXL memory expansion and pooling require the synthesis of a number of critical technologies. Rambus expertise in memory and SerDes subsystems, semiconductor and network security, high-volume memory interface chips and compute system architectures will make possible the breakthrough CXL memory solutions for the future data center.

Rambus CXL Platform Development Kit

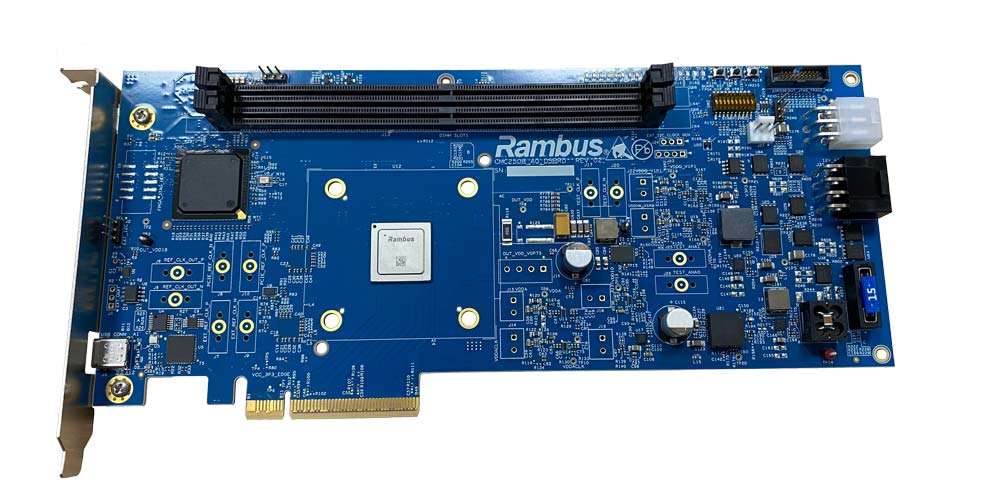

Rambus offers a CXL platform development kit (PDK) that enables module and system makers to prototype and test CXL-based memory expansion and pooling solutions for AI infrastructure and other advanced systems. The PDK is interoperable with CXL 1.1 and CXL 2.0 capable processors and memory from all the major memory suppliers. It leverages today’s available hardware to accelerate the development of the full stack of next-generation CXL-based solutions.

PDK Deliverables:

- CXL hardware platform (add-in card), featuring a prototype Rambus CXL memory controller

- Advanced debug and visualization tools

- CXL-compliant management framework and utilities

- Fully customizable SDK for value-added features and vendor-specific commands

For more information on the CXL PDK, please contact us.

CXL Memory Interconnect Initiative: Enabling a New Era of Data Center Architecture

In response to an exponential growth in data, the industry is on the threshold of a groundbreaking architectural shift that will fundamentally change the performance, efficiency and cost of data centers around the globe. Server architecture, which has remained largely unchanged for decades, is taking a revolutionary step forward to address the growing demand for data and the voracious performance requirements of advanced workloads.
