HBM2E Controller

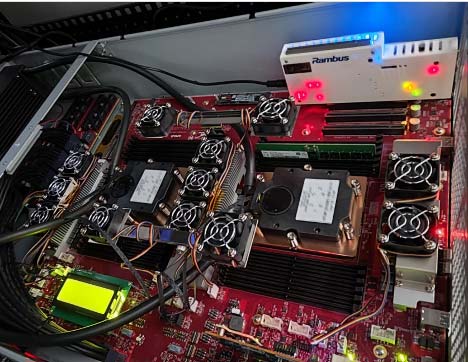

The Rambus HBM2E memory controller IP is designed for applications that require high memory throughput, including performance-intensive workloads in AI, HPC and graphics.

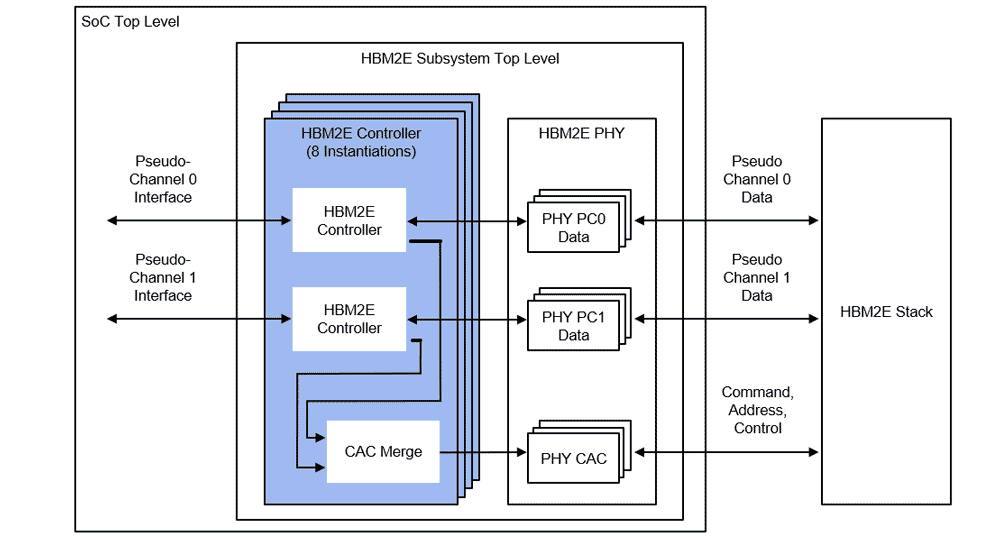

The HBM2E Interface Subsystem

HBM2E is a high-performance memory that offers reduced power consumption in a small form factor. It combines a 2.5D/3D architecture with a very wide 1024-bit interface running at a lower clock speed than GDDR6, delivering higher overall throughput and better bandwidth-per-watt efficiency for AI/ML and HPC applications.

The Rambus HBM2E controller supports both HBM2 and HBM2E devices at data rates of up to 3.6 Gbps per data pin, and it supports all standard channel densities: 4, 6, 8, 12, 16 and 24 Gb. Look-Ahead command processing maximizes memory bandwidth and minimizes latency. The core is DFI compatible (with extensions added for HBM2E) and supports an AXI or native interface to user logic.
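To put the channel densities in perspective, the short Python sketch below converts a per-channel density into total stack capacity. It assumes that "channel density" means the capacity of one channel and that a stack exposes the 8 independent channels described for HBM2E; the helper and constant names are hypothetical and not part of any Rambus deliverable.

```python
# Illustrative capacity arithmetic (hypothetical helper, not a Rambus API).
# Assumption: "channel density" is the capacity of one channel, and a stack
# exposes 8 independent channels as described for HBM2E.
CHANNELS_PER_STACK = 8
BITS_PER_BYTE = 8
STANDARD_CHANNEL_DENSITIES_GBIT = [4, 6, 8, 12, 16, 24]


def stack_capacity_gbyte(channel_density_gbit: int,
                         channels: int = CHANNELS_PER_STACK) -> float:
    """Total stack capacity in gigabytes for a given per-channel density."""
    return channel_density_gbit * channels / BITS_PER_BYTE


for density in STANDARD_CHANNEL_DENSITIES_GBIT:
    print(f"{density:>2} Gb per channel -> "
          f"{stack_capacity_gbyte(density):g} GB per stack")
```

With 8 channels, the factor of 8 cancels the bits-to-bytes conversion, so a channel density of N Gb corresponds to an N GB stack. For example, 24 Gb per channel gives 24 GB, the same total as a 12-high stack of 16 Gb dies (12 × 16 Gb = 192 Gb = 24 GB).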

The Rambus HBM2E controller is fully compliant with the JEDEC HBM2E JESD235 standard. The interface has 8 independent channels, each 128 bits wide, for a total data width of 1024 bits. At 3.6 Gbps per data pin, the resulting peak bandwidth is 461 GB/s per HBM2E memory device, which can contain 2, 4, 8 or 12 3D-stacked DRAM dies.
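The 461 GB/s figure follows directly from the interface width and the per-pin data rate. The minimal Python sketch below reproduces that arithmetic. The names are illustrative only, and the result is a theoretical peak: sustained bandwidth is lower because of refresh, row switches and read/write bus turnarounds.

```python
# Peak-bandwidth arithmetic for a 1024-bit HBM2/HBM2E stack interface.
# Illustrative helper only; names are hypothetical.
CHANNELS = 8             # independent channels per stack
BITS_PER_CHANNEL = 128   # data bits per channel
BITS_PER_BYTE = 8


def peak_bandwidth_gbyte_s(gbps_per_pin: float,
                           channels: int = CHANNELS,
                           bits_per_channel: int = BITS_PER_CHANNEL) -> float:
    """Theoretical peak bandwidth in GB/s (decimal) for one stack."""
    data_pins = channels * bits_per_channel  # 8 x 128 = 1024 data pins
    return gbps_per_pin * data_pins / BITS_PER_BYTE


for name, rate in [("HBM2E", 3.6), ("HBM2", 2.0)]:
    print(f"{name}: {rate} Gbps/pin -> {peak_bandwidth_gbyte_s(rate):.1f} GB/s")
```

At 3.6 Gbps per pin this gives 460.8 GB/s, which rounds to the quoted 461 GB/s. The same formula gives 256 GB/s for HBM2 at 2 Gbps per pin.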

The Rambus HBM2E controller, combined with the customer’s choice of PHY, forms a complete HBM2E memory interface subsystem.

HBM3E Memory: Break Through to Greater Bandwidth

Unrivaled memory bandwidth in a compact, high-capacity footprint has made HBM the memory of choice for AI training. HBM3 is the third major generation of the HBM standard, and HBM3E extends its data rate while keeping the same feature set. The Rambus HBM3E/3 Controller delivers industry-leading performance at data rates of up to 9.6 Gb/s, enabling memory throughput of over 1.2 TB/s per device for training recommender systems, generative AI and other compute-intensive AI workloads.
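The HBM3E throughput figure comes from the same width-times-rate arithmetic. The Python sketch below assumes a 1024-bit stack interface, which HBM3 organizes as 16 channels of 64 bits each, and uses decimal units (1 TB = 1000 GB). The helper name is hypothetical.

```python
# Peak-bandwidth arithmetic for HBM3E at 9.6 Gb/s per pin.
# Assumption: a 1024-bit stack interface (HBM3 organizes it as 16 x 64-bit
# channels); helper name is hypothetical.
INTERFACE_WIDTH_BITS = 16 * 64  # 1024 data pins
BITS_PER_BYTE = 8


def peak_bandwidth_tbyte_s(gbps_per_pin: float,
                           width_bits: int = INTERFACE_WIDTH_BITS) -> float:
    """Theoretical peak bandwidth in TB/s (decimal: 1 TB = 1000 GB)."""
    gbyte_s = gbps_per_pin * width_bits / BITS_PER_BYTE
    return gbyte_s / 1000


print(f"{peak_bandwidth_tbyte_s(9.6):.4f} TB/s")
```

At 9.6 Gb/s per pin this evaluates to 1,228.8 GB/s, or roughly 1.23 TB/s of theoretical peak bandwidth per stack.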

Solution Offerings

- Supports HBM2E and HBM2 devices

- Supports all standard HBM2/2E channel densities (4, 6, 8, 12, 16, 24 Gb)

- Supports data rates of up to 3.6 Gbps/pin

- Can handle two pseudo-channels with a single controller, or each pseudo-channel independently with two controllers

- Queue-based interface optimizes performance and throughput

- Maximizes memory bandwidth and minimizes latency via Look-Ahead command processing

- Achieves high clock rates with minimal routing constraints

- Full run-time configurable timing parameters and memory settings

- DFI compatible (with extensions added for HBM2E)

- Full set of add-on cores available

- Supports AXI or native interface to user logic

- Supports HBM2/2E RAS features

- Built-in hardware-level performance activity monitor

- Delivered fully integrated and verified with target PHY

- Core (source code)

- Testbench (source code)

- Complete documentation

- Expert technical support

- Maintenance updates

- Customization

- SoC Integration
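The feature list names Look-Ahead command processing but does not describe its algorithm. The Python sketch below is a hypothetical, simplified illustration of the general look-ahead idea used in DRAM schedulers: scanning pending requests so that row activations for upcoming accesses can be issued early, while data moves to other banks. It is not Rambus's implementation. `Request`, `schedule` and `lookahead` are invented names, and the model ignores timing parameters, refresh and pseudo-channels.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass(frozen=True)
class Request:
    """A pending memory access (hypothetical, simplified: bank + row only)."""
    bank: int
    row: int
    is_write: bool = False


def schedule(queue: List[Request], lookahead: int) -> List[str]:
    """Convert an in-order request queue into a DRAM command stream.

    Simplified model: no timing parameters, refresh or pseudo-channels.
    Requests complete in queue order. With lookahead > 0, after each column
    command the scheduler scans the next `lookahead` requests and opens the
    row (PRE/ACT) needed by the next pending request of each bank, so that
    activation can overlap with data transfers to other banks.
    """
    open_rows: Dict[int, Optional[int]] = {}
    commands: List[str] = []

    def open_row(bank: int, row: int) -> None:
        current = open_rows.get(bank)
        if current == row:
            return  # row hit: nothing to do
        if current is not None:
            commands.append(f"PRE b{bank}")  # close the conflicting row
        commands.append(f"ACT b{bank} r{row}")
        open_rows[bank] = row

    for i, req in enumerate(queue):
        open_row(req.bank, req.row)
        op = "WR" if req.is_write else "RD"
        commands.append(f"{op} b{req.bank} r{req.row}")

        # Look ahead: only the first upcoming request per bank may change that
        # bank's open row, which preserves per-bank request order.
        seen_banks: Set[int] = set()
        for nxt in queue[i + 1 : i + 1 + lookahead]:
            if nxt.bank not in seen_banks:
                seen_banks.add(nxt.bank)
                open_row(nxt.bank, nxt.row)
    return commands


queue = [Request(0, 10), Request(0, 10), Request(1, 20), Request(1, 20)]
print(schedule(queue, lookahead=0))
print(schedule(queue, lookahead=2))
```

For this queue, the in-order stream (`lookahead=0`) issues `ACT b1 r20` only after both reads to bank 0 have completed. With `lookahead=2`, that activation moves ahead of the second bank 0 read, so in real hardware its activation latency could overlap with bank 0's data transfer. The sequence of read commands is the same in both cases.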

Protocol Compatibility

| Protocol | Max. Data Rate (Gbps/pin) | Applications |
|---|---|---|
| HBM2E | 3.6 | AI/ML, HPC and graphics |
| HBM2 | 2.0 | AI/ML, HPC and graphics |