Rapid advances in CPU clock speeds and in architectural techniques such as pipelining and multithreading have placed increasing demands on memory system bandwidth with each new generation of computer systems. As the processor-memory performance gap continues to grow, succeeding generations of computer systems will increasingly be limited by their memory systems, in particular by the bandwidth of the memory system and its DRAMs. Core prefetch improves DRAM interface bandwidth while keeping the DRAM core at a lower frequency: the core runs at a reduced speed relative to the interface, and each core access transfers multiple bits of data to make up for the difference in transfer speed.

- Improves memory system bandwidth while maintaining a low DRAM core frequency

- Improves yield of high-bandwidth DRAM

- Reduces memory packaging and system costs

What is Core Prefetch Technology?

Providing high memory bandwidth is a challenging problem, and doing so within the constraints of high-yield, high-volume manufacturing adds to the challenge. A key aspect of increasing memory system bandwidth is boosting the rate of data transfer between the DRAM interface and the DRAM core, where data is stored. Core prefetch, an important innovation Rambus developed in the early 1990s, allowed this data transfer rate to increase. Core prefetch lowers the cost of providing high bandwidth and provides headroom for further bandwidth improvements.

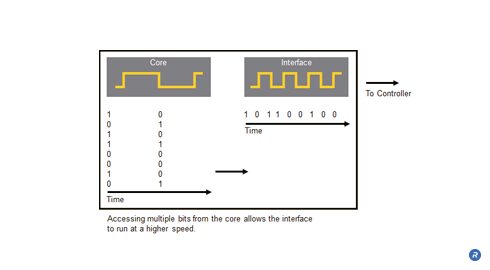

A fundamental obstacle to raising DRAM bandwidth is raising the data transfer rate between the DRAM interface and the DRAM core. One possibility is to increase the frequency of the DRAM core to match that of the DRAM interface. However, this introduces additional circuit complexity, increases die size, and raises DRAM power consumption, resulting in higher manufacturing cost and lower yield. Core prefetch takes a different approach: it allows the DRAM core to run at a reduced speed compared to the DRAM interface. To match the bandwidth of the interface, each core access transfers multiple bits of data in parallel, making up for the difference in transfer speeds. For example, if the interface transfers data eight times faster than the core cycles, each core access must supply eight bits for every data pin. In this manner, core prefetch lets DRAM bandwidth increase even when the DRAM core is limited to operating at a lower speed.
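The bandwidth-matching argument above can be sketched as simple arithmetic. The following is a minimal illustration, not a Rambus design tool: the function names are hypothetical, rates are treated as idealized peak values (transfers per second on the interface, accesses per second in the core), and the example numbers are illustrative only. The core keeps up with the interface when it fetches, per pin, at least as many bits per access as the interface transfers during one core cycle.

```python
import math


def prefetch_depth(interface_rate_mtps: float, core_rate_mhz: float) -> int:
    """Bits each data pin must fetch from the core per core access
    (the 'n' in an n-bit prefetch) so the core keeps pace with the interface.

    Hypothetical helper: assumes one core access per core cycle and
    one bit per pin per interface transfer.
    """
    # Interface transfers that occur during a single core cycle,
    # rounded up to a whole number of bits.
    return math.ceil(interface_rate_mtps / core_rate_mhz)


def core_bandwidth_mbps(core_rate_mhz: float, prefetch: int, pins: int) -> float:
    """Peak bandwidth the core can deliver, in megabits per second."""
    return core_rate_mhz * prefetch * pins


def interface_bandwidth_mbps(interface_rate_mtps: float, pins: int) -> float:
    """Peak bandwidth of the interface, in megabits per second."""
    return interface_rate_mtps * pins


if __name__ == "__main__":
    # Illustrative numbers: a 1600 MT/s interface, a 200 MHz core, 16 data pins.
    n = prefetch_depth(1600, 200)
    print(n)                                 # 8
    print(core_bandwidth_mbps(200, n, 16))   # 25600
    print(interface_bandwidth_mbps(1600, 16))  # 25600
```

With an 8n prefetch, the slow core and the fast interface deliver the same peak bandwidth. When the rate ratio is not an integer, rounding the prefetch depth up lets the core deliver slightly more than the interface needs rather than falling short.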

Who Benefits?

Core prefetch benefits many different groups by lowering the cost of achieving high DRAM bandwidth. DRAM manufacturers benefit from the higher yields that come from running the core at a lower speed, which increases the number of good DRAM devices in a given manufacturing run. The ability to supply a given level of bandwidth with fewer DRAMs reduces the number of controller pins and lowers packaging costs for controller designers. Fewer DRAMs also enable system integrators to decrease their bill-of-materials costs and allow for smaller system form factors. Finally, consumers benefit from lower system costs through higher DRAM yields, reduced packaging costs, and fewer DRAMs required for a given performance level.