GDDR Memory Controller IP

Rambus GDDR6 and GDDR7 controllers provide high-bandwidth, low-latency memory performance for AI/ML, graphics and HPC applications.

| Version | Maximum Data Rate (Gb/s) |
|---|---|
| GDDR7 | 40 |
| GDDR6 | 24 |

GDDR6 and GDDR7 Controller IP

| Feature | GDDR7 Controller | GDDR6 Controller |

|---|---|---|

| Speed Per Pin (Gb/s) | Up to 40 | Up to 24 |

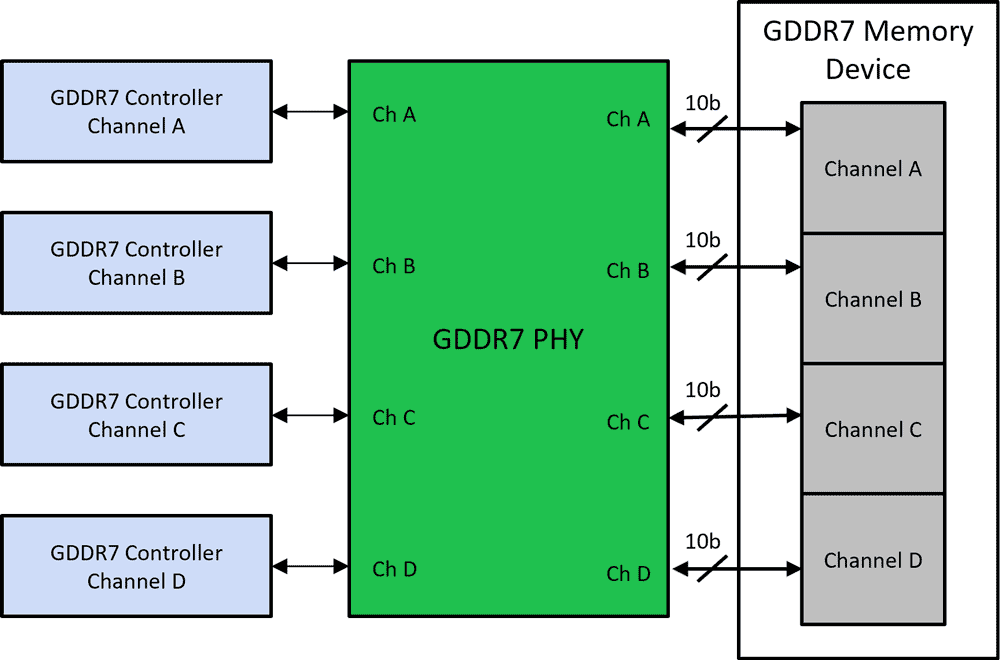

| Channels | 4 | 2 |

| Channel Width (bits) | 10 | 16 |

| Queue-Based Interface | Yes | Yes |

| Look-Ahead Command Processing | Yes | Yes |

| Clamshell Mode | Yes | Yes |

| Logic Interface | AXI or Native | AXI or Native |

| Controller Interface | DFI Compatible | DFI Compatible |

| Add-On Cores | Multi-Port Front-End Read-Modify-Write Memory Test Memory Test Analyzer | In-line ECC Multi-Port Front-End Reorder Read-Modify-Write Memory Test Memory Test Analyzer |

GDDR7 Memory Subsystem

Originally designed for graphics applications, GDDR is a high-performance memory solution that can be used in a variety of compute-intensive applications.

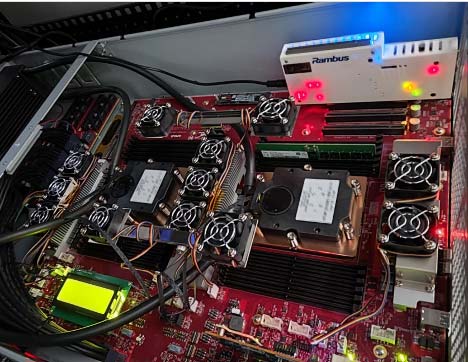

The Rambus GDDR7 controller supports both PAM3 and NRZ signaling and is optimized for high efficiency and low latency across a wide variety of traffic scenarios. It offers low-power support and robust Reliability, Availability and Serviceability (RAS) features. Comprehensive memory test support and integration support for third-party PHYs are available to instantiate a complete GDDR7 memory subsystem.

Supercharging AI Inference with GDDR7

A rapid rise in the size and sophistication of AI inference models requires increasingly powerful AI accelerators and GPUs deployed in edge servers and client PCs. GDDR7 memory offers an attractive combination of bandwidth, capacity, latency and power for these accelerators and processors. The Rambus GDDR7 Memory Controller IP delivers industry-leading GDDR7 performance of up to 40 Gb/s per pin, for 160 GB/s of available bandwidth per GDDR7 memory device.
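The per-device bandwidth figure follows from the per-pin data rate. The sketch below reproduces that arithmetic. It assumes a 32-bit data interface per device, which is the width implied by 160 GB/s at 40 Gb/s per pin and is not stated explicitly on this page. The function name and the `data_pins` parameter are illustrative only.

```python
def device_bandwidth_gbytes_per_s(pin_rate_gbps: float, data_pins: int = 32) -> float:
    """Peak bandwidth of one memory device in GB/s.

    pin_rate_gbps: data rate per pin in Gb/s (from the feature table).
    data_pins: number of data pins per device. The default of 32 is an
               assumption inferred from the quoted 160 GB/s figure.
    """
    # Total bits per second across all data pins, divided by 8 bits per byte.
    return pin_rate_gbps * data_pins / 8


if __name__ == "__main__":
    for name, rate in (("GDDR7", 40), ("GDDR6", 24)):
        bandwidth = device_bandwidth_gbytes_per_s(rate)
        print(f"{name}: {rate} Gb/s per pin -> {bandwidth:.0f} GB/s per device")
```

Under the same 32-pin assumption, the GDDR6 maximum of 24 Gb/s per pin works out to 96 GB/s per device. These are peak figures; sustained bandwidth depends on traffic patterns and controller efficiency.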

- Controller (source code)

- Testbench (source code)

- Complete documentation

- Expert technical support

- Maintenance updates

- Customization

- SoC Integration

HBM3E and GDDR6: Memory Solutions for AI

AI/ML changes everything, impacting every industry and touching the lives of everyone. With AI training sets growing at a pace of 10X per year, memory bandwidth is a critical area of focus as we move into the next era of computing and enable this continued growth. AI training and inference have unique feature requirements that can be served by tailored memory solutions. Learn how HBM3E and GDDR6 provide the high performance demanded by the next wave of AI applications.