In part one of this two-part blog series, Steven Woo, Rambus fellow and distinguished inventor, spoke with Ed Sperling of Semiconductor Engineering about utilizing various number formats to optimize memory bandwidth for artificial intelligence (AI) applications. In this blog post, we’ll be taking a closer look at the impact specific number formats have on performance and accuracy.

“At Hot Chips 29 (2017), Microsoft highlighted how the performance of certain neural network engines improved dramatically when they reduced the precision of the numbers they were working with,” Woo explains.

“In [one] particular case, Microsoft [went] from 16 bits down to 8 bits and saw improvements for both the Stratix 5 (3x) and Stratix 10 (7x),” Woo elaborates. “So now the question becomes: since I’m better able to use my memory bandwidth [by] packing more numbers into it, am I losing accuracy?”

According to Woo, when Microsoft trained with full 32-bit floating-point numbers, it reported a reference accuracy level of 1.0. When the company dropped all the way down to its 9-bit fp9 format, accuracy decreased slightly.

“[However], Microsoft showed that through retraining and changes to the algorithm, accuracy could be gained back – and in some cases, with even better accuracy than their reference 32-bit implementations,” says Woo. “[This example highlights how] memory bandwidth is considered so important and precious. [Indeed, system designers] are willing to change their algorithms to make the best use of what’s widely viewed as the scarcest resource for these types of applications.”

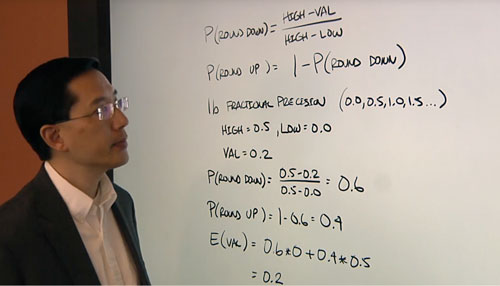

In addition to highlighting Microsoft’s fp9 format, Woo discusses an extreme scheme that allows for only one bit of fractional precision. In practical terms, this means that only numbers ending in .0 or .5, such as 0.0, 0.5, 1.0, and 1.5, can be encoded. With so few representable values, such a scheme is understandably limited.
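To make the one-bit case concrete, here is a minimal Python sketch that lists the values a fixed-point format can encode for a given number of fractional bits. The function name `representable_values` and its parameters are hypothetical, chosen only for illustration, and the sketch assumes non-negative values below an arbitrary upper bound.

```python
def representable_values(fractional_bits, upper):
    """List the non-negative values below `upper` that can be encoded
    with the given number of fractional bits (illustrative helper)."""
    step = 2.0 ** -fractional_bits   # one fractional bit gives a spacing of 0.5
    count = int(upper / step)        # how many grid points fit below `upper`
    return [i * step for i in range(count)]

print(representable_values(1, 2.0))  # one fractional bit
print(representable_values(2, 1.0))  # two fractional bits, for comparison
```

With one fractional bit the grid spacing is 0.5, so every value in between has to be rounded to one of these points.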

“And so researchers developed an interesting idea called stochastic rounding where you probabilistically round up or down – depending on what values you can represent and what the value of your number is,” Woo elaborates.

“This example [above] shows the probability that I’m going to round my number down to zero is dependent on whatever the highest value is, the fraction that I can represent, the value I’m trying to encode, and then the range between the highest and lowest values that I can encode with my fractional precision. The probability that I round that number up is just one minus the probability I’m rounding down.”
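Written out as a formula, the rule Woo describes might look like the following, where $x$ is the value to encode and $x_{\text{lo}}$ and $x_{\text{hi}}$ are the nearest representable values below and above it. These symbols are introduced here for illustration and do not come from the interview.

$$
P(\text{round down}) = \frac{x_{\text{hi}} - x}{x_{\text{hi}} - x_{\text{lo}}}, \qquad
P(\text{round up}) = 1 - P(\text{round down}) = \frac{x - x_{\text{lo}}}{x_{\text{hi}} - x_{\text{lo}}}
$$

Under these definitions the rounded result is unbiased, because the two outcomes average back to the original value:

$$
E[\text{rounded}] = x_{\text{lo}} \cdot \frac{x_{\text{hi}} - x}{x_{\text{hi}} - x_{\text{lo}}} + x_{\text{hi}} \cdot \frac{x - x_{\text{lo}}}{x_{\text{hi}} - x_{\text{lo}}} = x
$$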

To highlight how stochastic rounding works in practice, Woo provides an example based on one-bit fractional precision.

“With one-bit fractional precision my high value is 0.5 and my low value is 0.0 and so the value I really want to encode is 0.2. So, the probability that I’m rounding my number down is just the high minus the value which is 0.5 minus 0.2 divided by that range: 0.5 minus 0.0. So, it’s a 60% chance that I’m going to round my number down,” he states. “In the case of rounding up, it’s just one minus that fraction which is 40%. So, 40% of the time I would take my 0.2 and round it all the way up to 0.5. 60% of the time, I take my 0.2 and round it all the way down to zero. So, my expected value in the long run is just 60% x 0 (the number that I’m rounding down to) + 40% x 0.5 (the number that I’m rounding up to). The long-term average – my expected value – is at 0.2 which is exactly what we wanted to encode.”
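The arithmetic in this example can be checked with a minimal Python sketch. The function names `rounding_probabilities` and `stochastic_round` and the `step` parameter are hypothetical, introduced here for illustration; this is a sketch of the rule as Woo describes it, not any vendor's implementation.

```python
import math
import random

def rounding_probabilities(x, step=0.5):
    """Return (low, high, p_down, p_up) for x on a grid with spacing `step`."""
    low = math.floor(x / step) * step   # nearest representable value at or below x
    high = low + step                   # next representable value above it
    p_down = (high - x) / (high - low)  # (high minus value) divided by the range
    return low, high, p_down, 1.0 - p_down

def stochastic_round(x, step=0.5, rng=random):
    """Round x down with probability p_down, otherwise round it up."""
    low, high, p_down, _ = rounding_probabilities(x, step)
    return low if rng.random() < p_down else high

# Woo's example: encode 0.2 with one bit of fractional precision.
low, high, p_down, p_up = rounding_probabilities(0.2)
expected = p_down * low + p_up * high
print(f"P(down)={p_down:.2f}  P(up)={p_up:.2f}  expected value={expected:.2f}")
```

A value that already sits on the grid, such as 1.0, gets a round-down probability of 1 in this sketch and is therefore returned unchanged.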

As Woo notes, stochastic rounding can be an effective technique when there are enough trials and enough data for the rounded results to average out to the intended values.

“You have to make sure you’re able to achieve kind of the long-term averages for whatever calculations you’re doing. So, the more data [you have], the more accurate this becomes. The more opportunity you have to employ something like this, the better it’s going to work,” he adds.
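The effect of having more data can be illustrated with a small simulation. The sketch below is an assumption-laden illustration rather than a benchmark: the helper names (`stochastic_round`, `mean_of_rounded`, `round_to_nearest`), the fixed seed, and the trial counts are all chosen here for demonstration.

```python
import math
import random

def stochastic_round(x, step=0.5, rng=random):
    """Round x to a multiple of `step`, choosing down or up at random."""
    low = math.floor(x / step) * step
    p_down = (low + step - x) / step
    return low if rng.random() < p_down else low + step

def mean_of_rounded(x, trials, seed=0):
    """Average of `trials` stochastically rounded copies of x (seeded for repeatability)."""
    rng = random.Random(seed)
    return sum(stochastic_round(x, rng=rng) for _ in range(trials)) / trials

def round_to_nearest(x, step=0.5):
    """Conventional rounding to the closest multiple of `step`, for comparison."""
    return round(x / step) * step

for trials in (10, 1_000, 100_000):
    print(trials, mean_of_rounded(0.2, trials))
print("round to nearest:", round_to_nearest(0.2))
```

With only a handful of trials the average can land well away from 0.2, while a large number of trials should bring it close. Conventional round-to-nearest, by contrast, maps 0.2 to 0.0 every time, so that error never averages out.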

Stochastic rounding, says Woo, can be leveraged by systems that require high levels of power efficiency.

“For example, inferencing on battery-operated endpoints for IoT type applications [requires system designers to delicately] balance performance against using the minimal amount of memory, storage and bandwidth,” he explains. “Being able to use very small numbers with very few bits of fractional precision can really help.”

However, Woo emphasizes that a holistic design approach is needed for AI systems and applications, as stochastic rounding represents only one optimization technique.

“With [a] co-design [approach], the entire system should be thought of not only from the hardware side, but the software side as well, and how you use your data and solution. A whole host of techniques are being employed to [try and] compensate for the fact that you can’t get as much memory bandwidth as you want – and the techniques that are in use today aren’t necessarily as power efficient as people would like. So, techniques like stochastic rounding help you to get around these types of things. Our industry just needs to continue working hard and coming up with new methods to continue advancing AI,” he concludes.
