In part one of this three-part series, Semiconductor Engineering Editor in Chief Ed Sperling and Suresh Andani, Senior Director, Product Marketing and Business Development at Rambus, discussed the evolving PCIe specification. In this blog post, Sperling and Andani explore early market adoption of PCIe 5, as well as the networking environment the specification will support in the data center.

According to Andani, the enterprise and cloud data centers will be early adopters of PCIe 5. However, PCIe 5 will ultimately be adopted at the edge to support an increasing number of low-latency and time-sensitive applications.

“I do expect to see PCIe 5 adoption very soon – about in a year or so – within the edge data centers as well,” he adds.

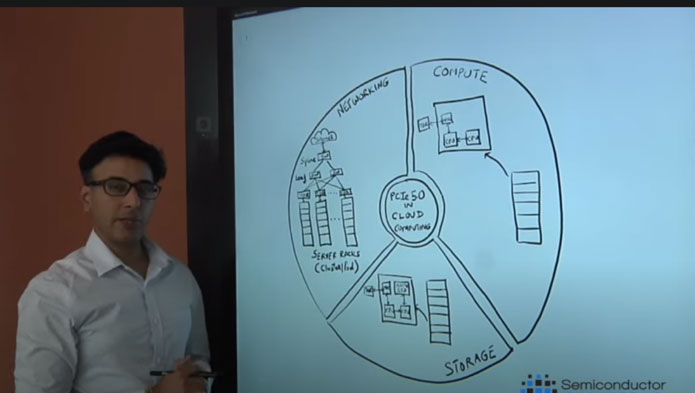

To illustrate the need for PCIe 5, Andani highlights various aspects of a typical hyperscale data center, pinpointing where the interface will be deployed.

“There are three main elements to a hyperscale data center: networking, compute and storage. What you see [shown in the network section of the image above] is a very typical cloud architecture, also known as the leaf-spine architecture of a data center. At the base node of this architecture you will see racks of servers and these racks are combined to form clusters,” he explains.

“The base computation, the processing, basically happens in these servers. As workloads become more sophisticated, there is a lot of east/west traffic. The servers, the applications, are running on multiple servers that are located within a rack – and traffic can also be exchanged between servers across racks and clusters. A lot of traffic flows in the east/west direction – from a rack to another rack.”

Workloads such as AI/ML training have grown so large that they need to be performed across many servers. As Andani points out, this flow of data is enabled by the network switch fabric.

“At the base level, you see the servers, then the top-of-rack (ToR) switch, which is responsible for data traffic exchange within a rack. Connecting these top-of-rack switches are the leaf switches that enable data traffic between racks within a cluster. When you go one layer up, there is a spine switch that enables traffic to flow from one cluster to the other clusters within a data center,” he continues.
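The hierarchy Andani describes can be sketched as a small lookup: given two servers, which switch tier does the traffic between them have to climb to? This is only an illustrative model. The `Server` tuple and the `highest_switch_tier` function are hypothetical names introduced here, and real fabrics add redundancy and multiple paths that this sketch ignores.

```python
from typing import NamedTuple


class Server(NamedTuple):
    """Hypothetical address of a server: which cluster, rack and slot it sits in."""
    cluster: int
    rack: int
    slot: int


def highest_switch_tier(src: Server, dst: Server) -> str:
    """Return the highest switch tier that east/west traffic must traverse.

    Assumes the three-tier layout described in the text:
    ToR within a rack, leaf between racks in a cluster,
    spine between clusters.
    """
    if src == dst:
        return "none"   # same server, no switch involved
    if src.cluster != dst.cluster:
        return "spine"  # traffic crosses clusters
    if src.rack != dst.rack:
        return "leaf"   # same cluster, different racks
    return "tor"        # same rack, different servers


a = Server(cluster=0, rack=0, slot=1)
b = Server(cluster=0, rack=0, slot=2)
c = Server(cluster=0, rack=3, slot=1)
d = Server(cluster=1, rack=0, slot=1)

print(highest_switch_tier(a, b))
print(highest_switch_tier(a, c))
print(highest_switch_tier(a, d))
```

A training job spread across servers in several racks or clusters would therefore push much of its traffic through the leaf and spine tiers, which is the east/west pattern described above.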

“At the front panel of the top-of-rack switch are the Ethernet QSFP nodes connecting to the standard two-socket server with an Ethernet link – and there is a network interface card (NIC) sitting on the server that connects the two. So, this link between the ToR switch and the server, the NIC of the server, is Ethernet. However, the traffic that originates from the network has to be passed on to the CPU for processing and that happens over PCIe.”

Data centers, says Andani, are moving from 40G Ethernet server networking to 100G. The upgrade to 400G is just beginning and a transition to 800G will follow.

“If you look at 100G Ethernet servers, that is a total bandwidth of 200 gigabits per second (Gbps). If you convert that into gigabytes per second (GB/s), this translates to roughly 30 GB/s [200 Gbps divided by eight is 25 GB/s]. Now 30 GB/s coming from the ToR switch to the NIC can easily be served with a PCIe 4 (x8), which is 32 GB/s (so that was not the bottleneck),” he states. “However, as we move from 100G Ethernet networking to 400G networking between the ToR and the server, we can do the math again. 400G in full duplex is equal to 800 Gbps. If you divide by eight, that is about 100 GB/s.”

According to Andani, 100 GB/s of traffic between the ToR and the server cannot realistically be served with PCIe 4. This is because the maximum aggregate bandwidth that a PCIe 4 (x16) link can support is 64 GB/s, which is well short of what is needed to support 400G Ethernet.

“This is where we need to get to PCIe 5 (x16). If you look at PCIe 5 (x16), it provides an aggregate duplex bandwidth of 128 GB/s, enough to service 100 GB/s (required by 400G Ethernet),” he adds.
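The arithmetic in this comparison can be reproduced with a few lines of Python. This is a minimal sketch that assumes raw signaling rates of 16 GT/s per lane for PCIe 4 and 32 GT/s per lane for PCIe 5, counts both directions of the link, and ignores encoding and protocol overhead, which is how the round figures of 32, 64 and 128 GB/s quoted above arise. The function names are illustrative only.

```python
# Raw per-lane signaling rates in GT/s (assumption: encoding and
# protocol overhead are ignored, so 1 GT/s is treated as 1 Gbps).
PCIE_GT_PER_LANE = {4: 16, 5: 32}


def ethernet_duplex_gbytes(link_gbps: float) -> float:
    """Aggregate full-duplex Ethernet bandwidth in GB/s (both directions)."""
    return link_gbps * 2 / 8


def pcie_duplex_gbytes(gen: int, lanes: int) -> float:
    """Aggregate full-duplex raw PCIe bandwidth in GB/s (both directions)."""
    return PCIE_GT_PER_LANE[gen] * lanes * 2 / 8


def can_serve(link_gbps: float, gen: int, lanes: int) -> bool:
    """True if the PCIe link has at least as much raw bandwidth as the Ethernet link."""
    return pcie_duplex_gbytes(gen, lanes) >= ethernet_duplex_gbytes(link_gbps)


# The three cases discussed in the text.
for link_gbps, gen, lanes in [(100, 4, 8), (400, 4, 16), (400, 5, 16)]:
    need = ethernet_duplex_gbytes(link_gbps)
    have = pcie_duplex_gbytes(gen, lanes)
    verdict = "sufficient" if can_serve(link_gbps, gen, lanes) else "insufficient"
    print(f"{link_gbps}G Ethernet needs {need:.0f} GB/s; "
          f"PCIe {gen} (x{lanes}) offers {have:.0f} GB/s: {verdict}")
```

Under these assumptions, 100G Ethernet works out to 25 GB/s against 32 GB/s for PCIe 4 (x8), while 400G Ethernet needs 100 GB/s, which exceeds the 64 GB/s of PCIe 4 (x16) but fits within the 128 GB/s of PCIe 5 (x16).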

As Andani confirms, this paradigm is the same for both an Exascale cloud operation and on-premises enterprise servers.

“On-premise data centers are looking more like hyperscale or cloud data centers because the type of processing that some big enterprises need to get done is at the same level. However, they do it on-premise because of security concerns. Nevertheless, at the end of the day, the workloads are just as intensive. For the cloud, you will observe slightly more intensive workloads, but the cloud will likely trailblaze PCIe 5 and then enterprise will soon follow,” he concludes.
