Semiconductor Engineering Editor in Chief Ed Sperling recently spoke with Rambus Sr. Director of Product Management Frank Ferro about designing high-performance memory subsystems for artificial intelligence (AI), machine learning (ML), and high-performance computing (HPC) applications.

As Ferro notes, there is plenty of compute (CPU) power available today to support the above-mentioned markets.

“[However], the advances in computing are now outstripping the ability to feed those [compute] engines with memory. [So, we are often asked (by customers) how to solve this memory bottleneck]– how do we keep all these compute engines fed for applications like HPC and AI/ML?”

More specifically, says Ferro, the above-mentioned bottleneck is in the memory subsystem, with compute speeds routinely outstripping the memory.

“In the past, system designers had limited choices for their memory subsystem. Essentially, DDR4 was the only choice for a time. [Since DDR4 could only hit a max speed of 3.2Gbps, it gave rise to the need for new solutions],” he explains. “High bandwidth memory (HBM), which was one of the first to emerge, is based on DDR technology. It runs at about 3.2Gbps today and gives you the same DDR technology – only with a very wide interface.”

Another memory type based on DDR, says Ferro, is GDDR6 which was originally created for the graphics market some 20 years ago. GDDR has undergone several major evolutions, with the latest iteration running at 16Gbps. In addition, LPDDR5, which has broken out of the mobile market, is running at speeds of 6.4 Gbit/s/pin.

“Designers can now look at all these different technologies to architect the memory subsystem. Depending on the application, any of these – HBM2E, GDDR6, and LPDDR – could potentially be a good solution to keep those CPUs fed with data.”

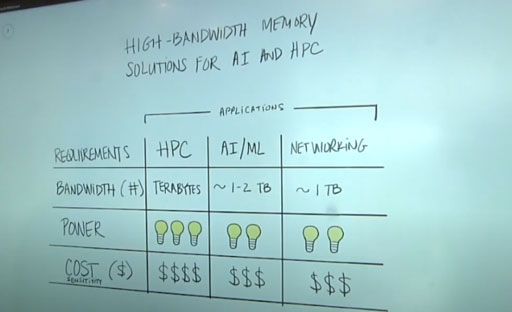

Selecting the most appropriate memory type, continues Ferro, depends on multiple tradeoffs that are illustrated in the image below.

“There are a number of applications that require HPC capabilities in the cloud. For example, HPC is often a key requirement for complex applications such as genome sequencing and graphics rendering,” Ferro states. “The trade-off for memory to support HPC applications is paying more in terms of power consumption, as well as dollars to access the highest levels of performance computing.”

For AI/ML, says Ferro, there are several market segments, including training with large, complex data sets that can take weeks to process and refine.

“Nevertheless, the tradeoffs for AI/ML memory can be more practical, as these applications typically require one to two terabytes of bandwidth. Power consumption is one of the biggest expenses in the data center, so a more balanced approach makes sense,” he explains. “Certainly, the more processing power you can access in the data center the better. At the same time, you must be conscious of the power and cost elements. Even within the data center you see several market segments where accelerator cards are limited by power. You have some cards that are down as low as 75 watts, but you’ll also have cards that are up to 250 to 300 watts – so you can draw on additional power for those highest performance applications.”

Ferro also notes that although networking isn’t typically associated with high bandwidth computing, the increase of in-line processing means that companies can no longer rely completely on the cloud.

“As you go through the edge of the network all the way up through the cloud, you’re going to want to do processing in-line. So that is why we are now seeing networking applications that require very high bandwidth,” he adds.

Leave a Reply