Introduction

What’s new about PCI Express 5 (PCIe 5)? The latest PCI Express standard, PCIe 5, doubles the speed of the PCIe 4.0 specification.

We’re talking about 32 gigatransfers per second (GT/s) vs. 16 GT/s, with an aggregate x16 link duplex bandwidth of almost 128 gigabytes per second (GB/s).

This speed boost is needed to support a new generation of artificial intelligence (AI) and machine learning (ML) applications as well as cloud-based workloads.

Both are significantly increasing network traffic. In turn, this is accelerating the adoption of higher-speed networking protocols, which are doubling in speed approximately every two years.

You can find much more about PCIe 5 in the article below.

Table of contents

1. PCI Express: Frequently Asked Questions (FAQ)

2. PCIe 5 – A New Era

3. PCIe 5 vs. PCIe 4 (+Comparison table included)

4. PCIe 5: Applications and Market Adoption

5. Complete PCI Express 5 Digital Controller Solutions from Rambus

6. Conclusion

PCI Express: Frequently Asked Questions (FAQ)

Let’s answer five frequently asked questions about PCI Express and PCIe 5.

a. What is PCI Express 5?

PCIe 5 is a high-speed serial computer expansion bus standard that moves data at high bandwidth between multiple components. A preliminary specification was announced in 2017, and the PCIe 5.0 specification was formally released in May 2019.

You might be wondering why a new PCI Express standard like PCIe 5 is needed. Well, PCIe 5 offers twice the data transfer rate of its PCIe 4 predecessor, delivering 32 GT/s vs. 16 GT/s. This speed increase is critical to support new AI/ML applications and cloud-centric computing.

b. Why both GT/s and GB/s?

GT/s is a measure of raw speed: how many bits each lane can transfer in a second, since every transfer carries one bit. The data rate, on the other hand, has to take into consideration the overhead for encoding the signal. Bandwidth is data rate times link width, so encoding overhead’s impact on the data rate translates directly to an impact on bandwidth.
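Written out as formulas, the relationship looks like this. The symbols are our own shorthand, not notation from the PCIe specification: R_T is the per-lane transfer rate in GT/s (one bit per transfer), p/e is the line-code efficiency (payload bits over encoded bits), and W is the number of lanes.

```latex
\begin{aligned}
R_D &= R_T \cdot \frac{p}{e} && \text{per-lane data rate (Gb/s)} \\
B_{\text{dir}} &= \frac{R_D \cdot W}{8} && \text{bandwidth per direction (GB/s)} \\
B_{\text{duplex}} &= 2\,B_{\text{dir}} && \text{aggregate bandwidth (GB/s)}
\end{aligned}
```

Plugging in p/e = 8/10 for 8b/10b gives R_D = 0.8 R_T, while p/e = 128/130 for 128b/130b gives R_D of roughly 0.985 R_T.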

Back in the days of PCIe 2, the encoding scheme was 8b/10b, so there was a hefty overhead penalty for encoding. With such a high overhead, it was particularly useful to have measures of transfer rate (x GT/s) and data rate (y Gbps), where “y” was only 80% of “x.”

Starting with Gen 3 and continuing through the present Gen 5, the PCI Express standard has used a far more efficient 128b/130b encoding scheme, so the overhead penalty is now less than 2% (2 bits out of every 130, or about 1.5%). As such, the link speed and the data rate are roughly the same.
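To make the difference between the two encoding schemes concrete, here is a small sketch that computes the overhead of each and the resulting per-lane data rate for a PCIe 2 lane (5 GT/s) and a PCIe 5 lane (32 GT/s). The function and dictionary names are our own, chosen for illustration.

```python
from fractions import Fraction

# Line-code efficiency = payload bits / encoded bits sent on the wire.
ENCODINGS = {
    "8b/10b": Fraction(8, 10),        # PCIe 1.x and 2.x
    "128b/130b": Fraction(128, 130),  # PCIe 3.0 through 5.0
}


def overhead_percent(scheme: str) -> float:
    """Share of raw line bits spent on encoding, as a percentage."""
    return float((1 - ENCODINGS[scheme]) * 100)


def data_rate_gbps(gt_per_s: float, scheme: str) -> float:
    """Per-lane data rate in Gb/s: one bit per transfer, minus encoding overhead."""
    return gt_per_s * float(ENCODINGS[scheme])


for scheme in ENCODINGS:
    print(f"{scheme}: {overhead_percent(scheme):.2f}% overhead")

print(f"PCIe 2 lane: 5 GT/s -> {data_rate_gbps(5, '8b/10b'):.2f} Gb/s")
print(f"PCIe 5 lane: 32 GT/s -> {data_rate_gbps(32, '128b/130b'):.2f} Gb/s")
```

This prints 20.00% overhead for 8b/10b and 1.54% for 128b/130b, so a 5 GT/s lane delivers 4.00 Gb/s while a 32 GT/s lane delivers 31.51 Gb/s. Using `Fraction` keeps the efficiency ratios exact until the final conversion to a float.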

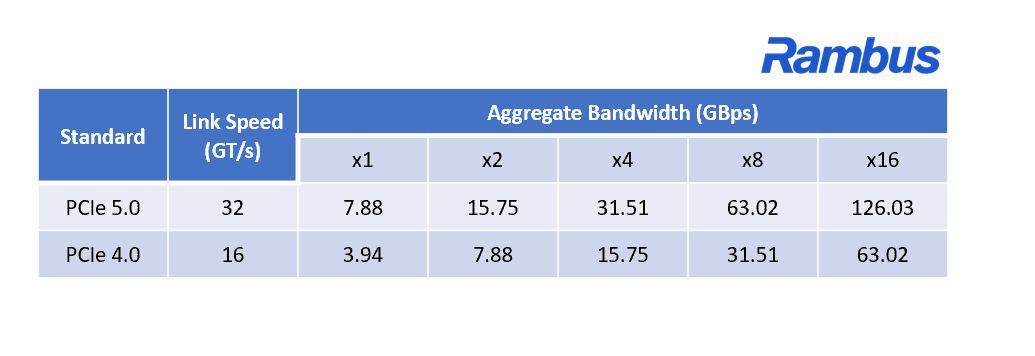

For a PCIe 5 x8 link, 32 GT/s raw speed translates to 31.5 GB/s of bandwidth in each direction (we chose a x8 link because eight lanes at one bit per transfer move one byte per transfer, so we can go straight from bits to bytes). And since PCIe is a duplex link, total aggregate bandwidth rounds to 63 GB/s (32 GT/s x 8 lanes / 8 bits per byte x 128/130 encoding x 2 for duplex).
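The same arithmetic as a small helper, so it can be applied to any lane count. This is a sketch assuming a 128b/130b-encoded link (Gen 3 through Gen 5); `pcie_bandwidth_gbytes` is a hypothetical name, and the result ignores packet and protocol overhead above the encoding layer.

```python
def pcie_bandwidth_gbytes(gt_per_s: float, lanes: int, duplex: bool = False) -> float:
    """Link bandwidth in GB/s for a 128b/130b-encoded PCIe link.

    Each lane moves one bit per transfer, so raw Gb/s = GT/s x lanes;
    divide by 8 for bytes and scale by 128/130 for encoding overhead.
    """
    per_direction = gt_per_s * lanes / 8 * 128 / 130
    return per_direction * 2 if duplex else per_direction


x8_one_way = pcie_bandwidth_gbytes(32, 8)
x8_duplex = pcie_bandwidth_gbytes(32, 8, duplex=True)
print(f"PCIe 5 x8: {x8_one_way:.1f} GB/s per direction, {x8_duplex:.1f} GB/s aggregate")
```

This prints 31.5 GB/s per direction and 63.0 GB/s aggregate. Calling `pcie_bandwidth_gbytes(32, 16, duplex=True)` gives about 126 GB/s, which is the "almost 128 GB/s" x16 figure once encoding overhead is subtracted.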

c. What is a PCI Express lane?

So what’s a PCI Express lane? Well, a PCIe lane consists of four wires forming two differential signaling pairs. One pair transmits data (from A to B), while the other receives data (from B to A). Want to know the best part? Because it has a dedicated pair for each direction, each PCIe lane functions as a full-duplex link that can send and receive data simultaneously, carrying its data in 128-bit blocks (130 bits on the wire after 128b/130b encoding).
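Under 128b/130b encoding, each lane sends its data as 130-bit blocks: a 2-bit sync header followed by 128 bits (16 bytes) of payload. The sketch below models only that framing. It is a simplified illustration, not the real physical layer: it ignores scrambling, on-the-wire bit ordering, and how bytes are striped across lanes, and the sync-header value `0b10` for data blocks is our assumption that should be checked against the PCIe Base Specification. `frame_block` and `split_block` are hypothetical helper names.

```python
SYNC_DATA = 0b10  # assumed 2-bit sync header marking a data block
PAYLOAD_BITS = 128


def frame_block(payload: bytes, sync_header: int = SYNC_DATA) -> int:
    """Pack a 2-bit sync header and 16 payload bytes into one 130-bit integer."""
    if len(payload) != PAYLOAD_BITS // 8:
        raise ValueError("a 128b/130b block carries exactly 16 bytes of payload")
    if not 0 <= sync_header <= 0b11:
        raise ValueError("the sync header is only 2 bits wide")
    return (sync_header << PAYLOAD_BITS) | int.from_bytes(payload, "big")


def split_block(block: int) -> tuple[int, bytes]:
    """Recover the sync header and the 16 payload bytes from a framed block."""
    payload = block & ((1 << PAYLOAD_BITS) - 1)
    return block >> PAYLOAD_BITS, payload.to_bytes(PAYLOAD_BITS // 8, "big")


block = frame_block(bytes(range(16)))
print(block.bit_length())
```

The framed block is 130 bits long, of which 128 carry payload, which is exactly where the 128/130 efficiency factor comes from.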

d. What does PCIe x16 mean?

We’ve discussed lanes, but what do they have to do with x16? Well, the term “PCIe x16” is used to refer to a 16-lane link instantiated on a board or a card. Physical PCIe links may include 1, 2, 4, 8, 12, 16 or 32 lanes. The 32-lane link is a pretty rare beast, so in practical terms the x16 represents the top end of the PCI Express link options.
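A quick way to see how width scales a link is to tabulate the lane options listed above. This sketch counts only the four data-signal wires per lane described earlier (real connectors also carry reference clock, power, ground and sideband signals) and shows raw per-direction throughput at the PCIe 5 rate before encoding overhead. The constant and function names are our own.

```python
# Link widths the text lists for PCIe 5 (x32 is rarely implemented).
LINK_WIDTHS = (1, 2, 4, 8, 12, 16, 32)
WIRES_PER_LANE = 4   # one transmit pair + one receive pair
GT_PER_S_GEN5 = 32   # one bit per transfer per lane, in each direction


def signal_wires(lanes: int) -> int:
    """Number of data-signal wires for a link of the given width."""
    if lanes not in LINK_WIDTHS:
        raise ValueError(f"x{lanes} is not a PCIe link width")
    return lanes * WIRES_PER_LANE


for lanes in LINK_WIDTHS:
    raw_gbps = lanes * GT_PER_S_GEN5  # before 128b/130b encoding overhead
    print(f"x{lanes:<2} {signal_wires(lanes):>3} wires  {raw_gbps:>5} Gb/s raw per direction")
```

For the x16 case, that is 64 data-signal wires carrying 512 Gb/s raw in each direction.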

e. What is PCI Express used for?

We’ve talked a lot about PCIe 5, but what is PCI Express actually used for?

You can think of the PCIe interface as the system “backbone” that transfers data at high bandwidth between various compute nodes. What’s the bottom line? Put simply, PCIe 5 rapidly moves data between CPUs, GPUs, FPGAs, networking devices and ASIC accelerators using links with various lane widths configured to meet the bandwidth requirements for the linked devices.
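Configuring lane width to match a device's needs can be sketched as picking the narrowest standard width whose per-direction bandwidth covers the requirement. The 25 GB/s requirement below is a hypothetical example, and the calculation accounts only for 128b/130b encoding, not protocol overhead; `min_link_width` is an illustrative name, not a real API.

```python
LINK_WIDTHS = (1, 2, 4, 8, 12, 16, 32)


def lane_gbytes(gt_per_s: float) -> float:
    """Per-lane, per-direction bandwidth in GB/s after 128b/130b encoding."""
    return gt_per_s / 8 * 128 / 130


def min_link_width(required_gbytes: float, gt_per_s: float = 32.0) -> int:
    """Smallest standard width whose per-direction bandwidth meets the requirement."""
    for lanes in LINK_WIDTHS:
        if lanes * lane_gbytes(gt_per_s) >= required_gbytes:
            return lanes
    raise ValueError("requirement exceeds what a x32 link can carry")


print(min_link_width(25.0))        # PCIe 5 (32 GT/s)
print(min_link_width(25.0, 16.0))  # PCIe 4 (16 GT/s)
```

For the hypothetical 25 GB/s device, PCIe 5 needs only a x8 link (about 31.5 GB/s each way), while PCIe 4 needs x16, since its x12 option tops out at about 23.6 GB/s.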

PCIe 5 vs. PCIe 4

Here’s a handy by-the-numbers comparison of PCIe 5 vs. PCIe 4 with the actual aggregate (duplex) bandwidth adjusted for the encoding overhead.
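The aggregate figures in such a comparison follow directly from the transfer rates. The sketch below computes duplex bandwidth for common widths at 16 GT/s (PCIe 4) and 32 GT/s (PCIe 5), adjusting only for 128b/130b encoding; the names are ours, and the width selection is our own choice for illustration.

```python
GENERATIONS = {"PCIe 4": 16, "PCIe 5": 32}  # GT/s per lane
WIDTHS = (1, 2, 4, 8, 16)


def duplex_gbytes(gt_per_s: float, lanes: int) -> float:
    """Aggregate (both directions) bandwidth in GB/s after 128b/130b overhead."""
    return 2 * gt_per_s * lanes / 8 * 128 / 130


print("Width  PCIe 4 (GB/s)  PCIe 5 (GB/s)")
for lanes in WIDTHS:
    gen4 = duplex_gbytes(GENERATIONS["PCIe 4"], lanes)
    gen5 = duplex_gbytes(GENERATIONS["PCIe 5"], lanes)
    print(f"x{lanes:<5} {gen4:>13.1f} {gen5:>14.1f}")
```

The x16 row comes out at 63.0 GB/s for PCIe 4 and 126.0 GB/s for PCIe 5, the encoding-adjusted versions of the rounded ~64 GB/s and ~128 GB/s figures.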

PCIe 5: Applications & Market Adoption

AI/ML and Cloud Computing

Not surprisingly, PCIe 5 is the fastest PCI Express generation yet. While the speed upgrade makes today’s applications run faster, what’s particularly exciting is that PCIe 5 is enabling new applications in markets such as AI/ML and cloud computing.

AI applications generate, move and process massive amounts of data at real-time speeds. One example is a smart car, which can generate as much as 4 TB of data per day!

But that’s not all: the size of AI/ML training models is doubling every 3-4 months. The torrent of data and the rapid growth in training models are putting tremendous stress on every aspect of the compute architecture, with interconnections between devices and systems being of critical importance. Fast access to memory is also critical, since extremely compute-intensive AI/ML workloads must be kept continuously supplied with data.

But while AI/ML is one major megatrend, there are others. Data centers are changing, with enterprise workloads moving to the cloud at a rapid pace. Those applications mean moving more data, often with real-time speed and latency requirements.

This shift to the cloud, along with ever-more sophisticated AI/ML applications, is accelerating the adoption of higher-speed networking protocols, which are doubling in speed about every two years: 100GbE -> 200GbE -> 400GbE.

Now this is where PCI Express 5 comes in. PCIe 5 delivers duplex link bandwidth of almost 128 GB/s in a x16 configuration. Put simply, PCI Express 5 addresses the demands of AI/ML and cloud computing by supporting higher-speed networking protocols as well as higher-speed interconnections between system devices.
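A rough check shows why the networking progression above matters for PCIe. The sketch compares each Ethernet line rate (converted to GB/s per direction) with the per-direction bandwidth of a x16 link at PCIe 4 and PCIe 5 rates. It is a back-of-the-envelope estimate: it ignores Ethernet framing and PCIe packet overhead, both of which reduce usable throughput further, and the function names are hypothetical.

```python
ETHERNET_GBPS = (100, 200, 400)  # line rates from the progression above


def x16_per_direction_gbytes(gt_per_s: float) -> float:
    """Per-direction bandwidth of a x16 link in GB/s after 128b/130b encoding."""
    return gt_per_s * 16 / 8 * 128 / 130


def x16_can_carry(ethernet_gbps: float, gt_per_s: float) -> bool:
    """True if a x16 link at this rate covers one port's line rate in each direction."""
    return x16_per_direction_gbytes(gt_per_s) >= ethernet_gbps / 8


for rate in ETHERNET_GBPS:
    gen4 = x16_can_carry(rate, 16)
    gen5 = x16_can_carry(rate, 32)
    print(f"{rate}GbE needs ~{rate / 8:.1f} GB/s each way: PCIe 4 x16={gen4}, PCIe 5 x16={gen5}")
```

A PCIe 4 x16 link (about 31.5 GB/s each way) covers 100GbE and 200GbE but falls short of the 50 GB/s a single 400GbE port needs, while a PCIe 5 x16 link (about 63 GB/s each way) covers it.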

Complete PCI Express 5 Digital Controller Solutions from Rambus

Rambus offers a highly configurable PCIe 5.0 digital controller.

The Rambus PCIe 5.0 Controller can be paired with third-party PHYs or PHYs developed in-house. Rambus can provide integration and verification of the entire interface subsystem.

Conclusion

In “PCI Express 5 vs. 4: What’s New?”, we explain how PCI Express is the system backbone that transfers data at high bandwidth between CPUs, GPUs, FPGAs and ASIC accelerators using links of variable lane widths, depending on the bandwidth needs of the linked devices.

We also detail how the latest PCI Express standard, PCIe 5, represents a doubling over PCIe 4, with a raw speed of 32 GT/s vs. 16 GT/s translating to a total duplex bandwidth for a x16 link of ~128 GB/s vs. ~64 GB/s.

We then explore how the higher data rates of PCIe 5 are enabling system designers to support a new generation of cloud computing and AI/ML applications.
